In a new era where artificial intelligence is increasingly becoming a cornerstone of digital strategy, questions around AI regulation, especially in highly sensitive sectors like healthcare and finance, are growing louder.

The rapid progress of AI development over the last year has only intensified the big questions that businesses are asking about how AI-written content ought to be regarded in highly regulated spaces.

In this post, we’ll dissect the utility of generative AI, with a specific focus on how AI regulation could slow the pace of its use.

The Challenge of YMYL Content

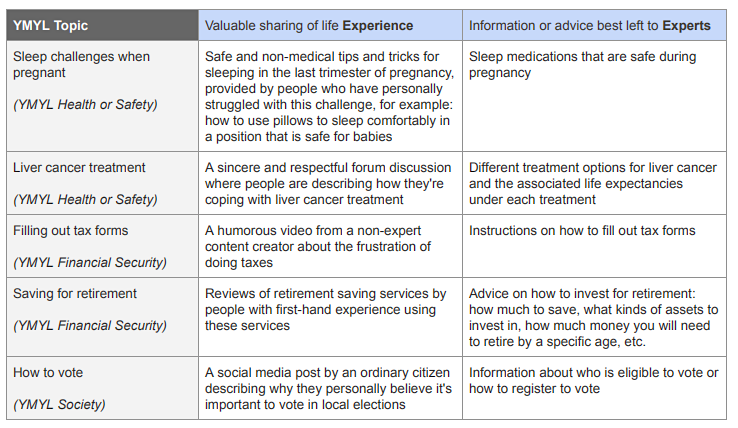

Your Money or Your Life (YMYL) is a term that Google uses to categorize web pages that could potentially impact a person’s future happiness, health, financial stability or safety.

As you might imagine, there are a lot of challenges that come with creating AI-written content in YMYL spaces like healthcare and finance.

These are sectors where misinformation can have severe consequences, so they are heavily scrutinized by search engines and social media platforms. Now, with the heightened use of AI, there’s a valid concern that more “AI chaff” will flood topics that require highly researched and informed perspectives.

The Risk Factor of AI Content: Why Authority Matters

One of the key things to realize here is the role of authority in content performance.

If a website is already authoritative and well-known in its niche (think WebMD in healthcare), its AI-generated content tends to perform well. However, for websites that are still building their authority, AI-written content tends to perform notably worse.

This raises an important question: Why do search engines like Google and social media platforms like Facebook care so much about authority in these sectors?

The answer lies in the potential risks involved. Imagine a website advising you to invest all your money in a new cryptocurrency called “NeoCoin,” promising you’ll become a trillionaire. If someone took that advice and lost their life savings, the repercussions would be severe, not just for the individual but also for the platforms that allowed such content to be disseminated.

This is why these platforms are cautious: They don’t want to be held accountable for promoting misleading or harmful information.

Building Trust Takes Time

Whether the content is AI-generated or human-written, building trust and authority in YMYL sectors is a long-term commitment. It’s not just about producing high-quality content. It’s also about consistently delivering value and gaining recognition as a reliable source of information over time.

Once you’ve established yourself as a clear, reliable source of genuine information that isn’t simply an amalgam of other commentaries and studies regurgitated through AI content, then you can start tinkering with the use of AI-assisted content that supplements human material and thoughts.

The Regulatory Landscape

Although authority and trust are important, it’s crucial to understand that these are not the only factors at play. Regulatory bodies and politicians are increasingly scrutinizing the role of AI in content creation, especially in sensitive sectors.

Government Oversight

The risk of misinformation has led to calls for stricter regulations on AI-written content. The U.S. government is keen to ensure that AI does not become a tool for spreading false or misleading information, which could have broad societal consequences. This is another reason platforms like Google and Facebook are cautious: they don’t want to attract unwanted attention from regulators.

It’s also worth noting that AI regulation is not confined to a single country or region. The European Union is also stepping in with its own regulations, such as the Artificial Intelligence Act, billed as the world’s first comprehensive AI law, which aims to create a unified approach to AI governance.

This adds another layer of complexity for platforms and content creators who operate globally.

User Experience

Beyond the legal implications, there’s also the matter of user experience. Platforms want to ensure that the content they promote enhances user engagement and trust. Poorly written or misleading content, whether AI-generated or not, detracts from this goal.

The Future of AI in Regulated Sectors

So, what does the future hold for AI-written content in regulated industries?

Websites with established authority in their respective niches are likely to find success with AI-generated content. This is a crucial point, as it suggests that the future of AI in regulated sectors may be less about the technology itself and more about how it’s implemented within the context of existing trust and credibility.

However, for emerging platforms or those still in the process of building their authority, the journey is fraught with challenges. These range from gaining user trust to navigating the intricate web of regulations that govern these sectors. It’s a long road, but one that is not without its rewards for those who navigate it successfully.

Innovations on the Horizon

The future of AI in regulated sectors is a dynamic landscape, shaped by a blend of technological innovation, regulatory shifts and evolving user expectations. While established authority will continue to play a significant role, adaptability and innovation will be equally crucial for success. Promising areas include:

- AI Ethics and Transparency: One of the most promising areas of innovation is in AI ethics and transparency. As AI systems become more sophisticated, there’s a growing need for mechanisms that can explain how these systems make decisions, especially when it comes to generating content in regulated sectors. Transparent AI could help build trust among users and regulators alike, making it easier for new platforms to gain credibility.

- Sector-Specific AI Solutions: Another avenue for innovation is the development of AI algorithms tailored for specific sectors. For instance, AI systems designed for healthcare could be trained to adhere to medical guidelines, while those in finance could be programmed to comply with financial regulations. These sector-specific solutions could provide a more nuanced approach to content creation, making it easier for platforms to align with regulatory requirements.

- Real-Time Regulatory Compliance: Imagine an AI system that could adapt its content in real time based on changing regulations. This is not as far-fetched as it sounds. With advancements in machine learning and natural language processing, future AI systems could be designed to monitor regulatory updates and adjust their output accordingly. This would not only ease the burden on content creators but also enhance compliance, reducing the risk of penalties.

Final Thoughts on AI Regulation

AI regulation in content creation, especially in YMYL sectors, is a complex issue that involves multiple stakeholders, including search engines, social media platforms, regulatory bodies and website owners themselves.

While authority and trust are key factors in content performance, they are part of a larger ecosystem that also includes legal considerations and user experience. As AI continues to evolve, so will the rules that govern its application, making it imperative for businesses to stay informed and adaptable.

How will you adapt your use of AI to the regulations that are coming?
